You don’t need an AI research lab: follow these AI and API patterns

Generative AI has captured the world’s imagination. What does the AI trend mean for us who are building applications or digital products with APIs?

There is so much hype around AI: social media is full of AI startups, prompt suggestions, and LLMs. And for good reason, because the technology feels close to magical!

The underlying technology is not really new (I remember experimenting with the GPT-3 API in late 2020), but it was ChatGPT that inspired the world. It reportedly reached 100 million users within two months, which made it the fastest-growing consumer application ever. And now everyone asks: what does the AI trend mean for me, my company, my job, my products, and my applications?

In this blog post, I want to address the question: What does the AI trend mean for us who are building applications or digital products with APIs? Maybe your boss has already approached you and suggested: “Let’s add this AI thing to our product.” Are there any patterns for building AI-powered applications, and what is the role of APIs? What are some of the basic architectural patterns that make use of the relationship between AI and APIs?

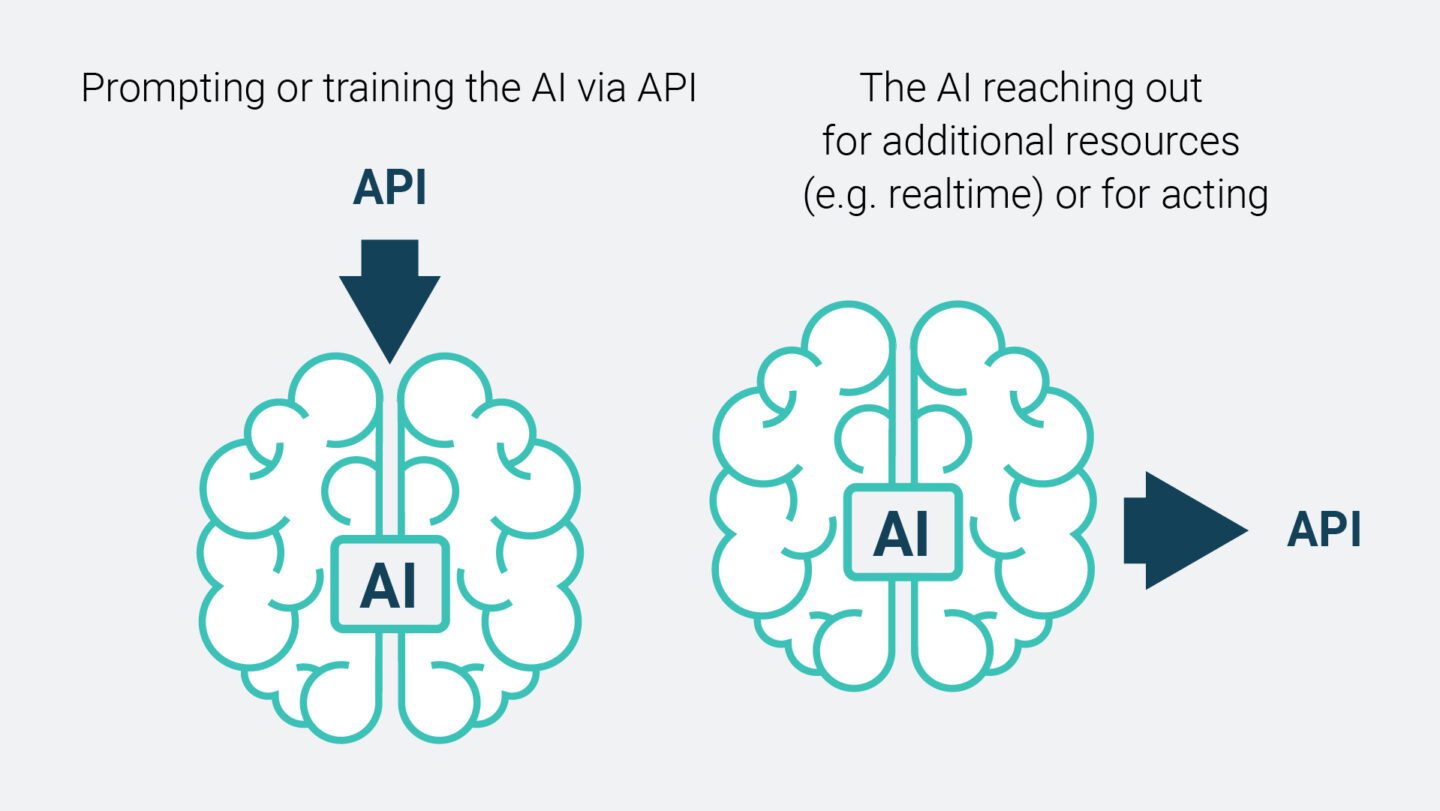

As we shall see, APIs play a very important role in connecting an AI-service to the world around it. In fact, connecting systems is exactly what APIs are good at. There are at least two basic patterns for the AI-API relationship, and we will explore them in the following sections.


APIs democratize access to AI

We see big companies starting AI research labs and hiring data scientists to develop AI models. But what about small companies and organizations that do not have a dedicated AI research lab? Will they have to stand on the sidelines while the bigger companies take advantage of the AI hype? Are AI innovations limited to companies that can afford an AI research lab, massive computing resources and GPUs, vast amounts of data, and the skills to train and build their own AI models?

For many use cases of AI, especially those dealing with natural language, you don’t need a dedicated AI research lab that develops your own models for you. Instead, you can simply use an existing AI model, in particular an existing large language model (LLM). If required, prompt engineering, fine-tuning, and embeddings can be used to tailor these models to your specific use case.

How is this possible? One word: APIs.

APIs are packages that hide the complexity of what is inside them. An AI model can be packaged behind an easy-to-use API, and through that API it becomes available to all programmers, including those who are not AI experts. Once an LLM is packaged as an API, all you have to deal with as a developer is the API. Now you need to be good at APIs, not necessarily at LLMs.

APIs allow for the separation of concerns and competencies. A few specialized data scientists create AI models and package them as APIs (e.g., OpenAI). Many developers, who don’t need to be AI experts, can use these AI models, integrate them into their applications, and build “smart” applications that can deal with natural language.

The result: APIs democratize access to powerful AI models.

Developers from big and small companies have access to AI models and can leverage AI in their applications. As a developer, you need to be good at calling AI-APIs and you need to learn to use them in the right way.

AI and API Patterns for Modern Applications

Modern applications will leverage both AI and APIs in some form:

- AI helps make applications “smart”; in particular, LLMs let applications understand human language, and the intent behind it, as input

- APIs help to access data and connect systems

These two technologies won’t just stand next to each other and be used separately. They are especially powerful when combined synergistically. Which patterns for combination are there?


Pattern 1: Call AI services via API

How does the AI receive input from its environment? It needs a prompt. If you want to integrate the AI into your application, the application has to deliver that prompt to the AI, and it does so via an API: applications use the API to send prompts, tasks, and other input to the AI.

AI models are often packaged in the form of an API. For example, the AI model behind the well-known ChatGPT is available as an API, and many other AI models are also served as an API. The API allows us to trigger the AI and send our prompts as input. Via APIs, developers can integrate AI into their applications, and make their applications “smart”.

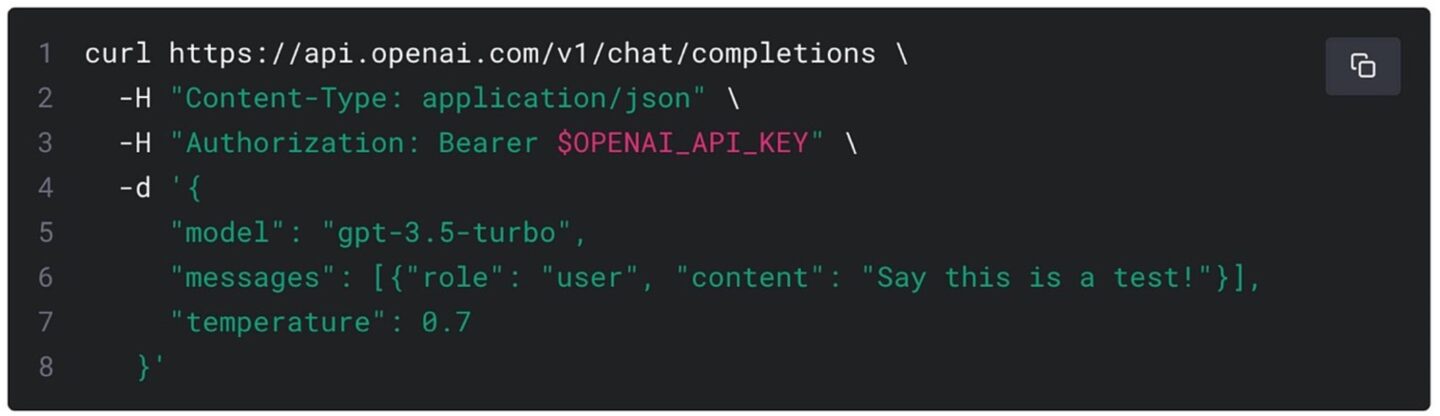

If this sounds too abstract and philosophical, here is an actual API request that triggers the AI. The following request (shown as a curl call) sends the input prompt “Say this is a test.” to the AI model.

API call to trigger the GPT-3.5 Turbo AI model with a prompt (Source: OpenAI)

The AI receives the prompt and computes a completion as output. For this API call, the response contains: “This is a test.”
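For readers who prefer code to a screenshot, here is a minimal Python sketch of the same kind of request, using only the standard library. It assumes OpenAI's Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`), an API key in the `OPENAI_API_KEY` environment variable, and the `gpt-3.5-turbo` model; the helper names `build_request`, `extract_completion`, and `ask` are hypothetical, chosen for illustration.

```python
import json
import os
import urllib.request

# Assumed endpoint of OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Package a prompt as an HTTP POST request to the AI-API (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The key is read from the environment; an empty key will be rejected by the API.
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


def extract_completion(response_body: dict) -> str:
    """Pull the generated text out of a Chat Completions response."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the prompt and return the completion (needs network access and a valid key)."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return extract_completion(json.load(resp))


if __name__ == "__main__":
    print(ask("Say this is a test."))
```

Everything model-specific stays behind the HTTP interface: the application only builds a JSON payload, sends it, and reads one field of the JSON it gets back.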

Pattern 2: AI services call APIs

When an AI model computes its response to a prompt, the output is typically some text. I like to compare the text output of the AI to an idea or a thought in our heads: an idea is quite abstract, and we have a lot of ideas. We humans evaluate our ideas and put some of them into action, because we know that good ideas only have an impact when they are followed by actions.

For us humans, it means that the brain tells us to act, e.g., to do something with our hands in order to change our environment. For example, if we feel warm, we move into the shade or switch on the air conditioning. We act. Ideas are nice, but they alone don’t change or improve the situation. Only by acting on the ideas can we improve the situation. It’s quite simple. What is the corresponding construct that would allow an AI to act? How can an AI-service put ideas that were computed by an AI-model into action?

In short: By calling APIs.

APIs are the hands of an AI-service: they allow it to act.

APIs make it possible to trigger actions in the real or digital world, such as making a payment, sending a text message, or adjusting the thermostat to change the room temperature. If an AI-service can call APIs, it can break out of its bubble of text output, translate ideas into actions, and act in its environment.

And if this sounds too philosophical, here is the code:

Here I will show you how ChatGPT can call APIs. The mechanism is called ChatGPT Plugins and consists of (1) an API implementation, (2) an OpenAPI specification of that API, and (3) a manifest file that links everything together and describes the API as a whole, so the AI can determine for which requests it should call the API.

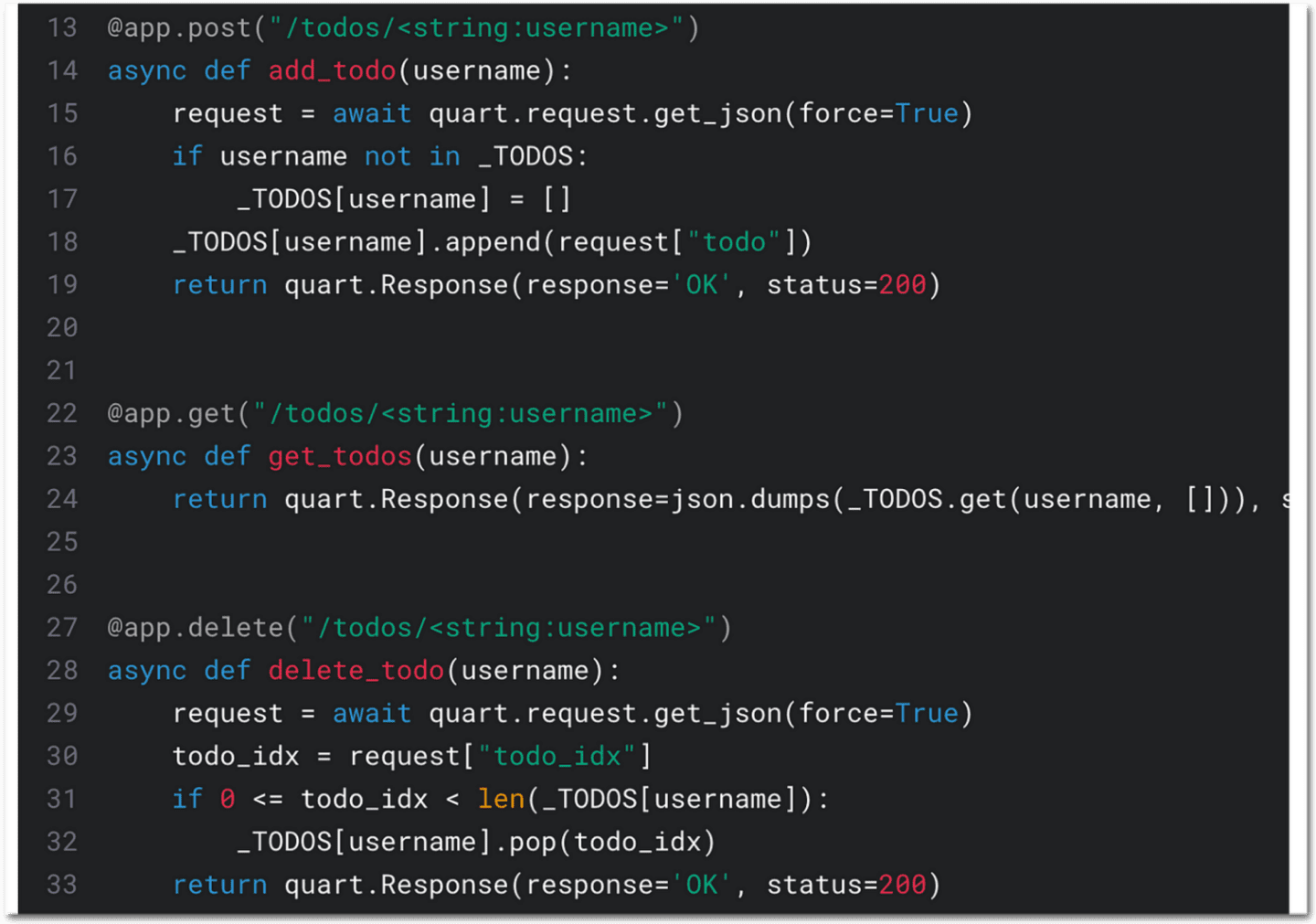

In the following, I show some sample code snippets of a ChatGPT Plugin that manages a TODO list (all source code is by OpenAI).

The first code snippet is a part of the implementation of an API for managing TODO lists.

API implementation for a ChatGPT Plugin (Source: OpenAI)

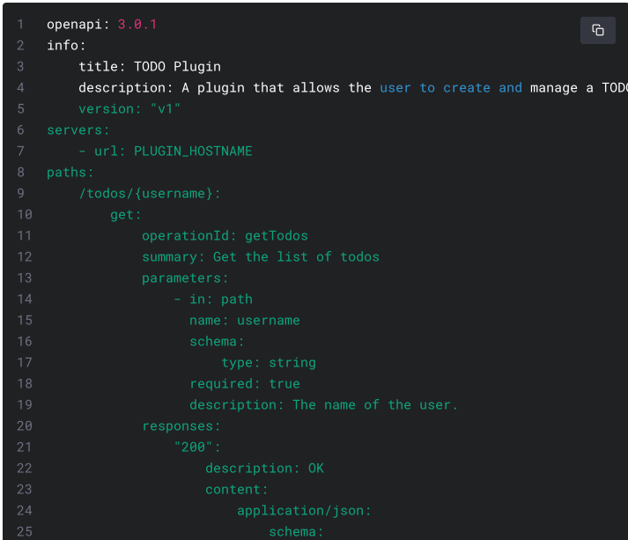
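OpenAI's implementation is not reproduced in this text. As a rough, dependency-free stand-in (not OpenAI's code), the following Python sketch serves the same kind of TODO-list API as a WSGI app from the standard library. The route `/todos/<username>`, the JSON field `todo`, and the `todo_idx` query parameter are assumptions made for illustration; authentication and persistent storage are left out.

```python
import json
from urllib.parse import parse_qs
from wsgiref.simple_server import make_server

# In-memory storage: username -> list of TODO strings (lost on restart).
_TODOS: dict[str, list[str]] = {}


def _respond(start_response, status: str, body: bytes, content_type: str = "text/plain"):
    start_response(status, [("Content-Type", content_type)])
    return [body]


def todo_app(environ, start_response):
    """WSGI app for /todos/<username>: GET lists, POST adds, DELETE removes an item."""
    parts = environ.get("PATH_INFO", "").strip("/").split("/")
    if len(parts) != 2 or parts[0] != "todos":
        return _respond(start_response, "404 Not Found", b"Not Found")
    todos = _TODOS.setdefault(parts[1], [])
    method = environ["REQUEST_METHOD"]

    if method == "GET":
        return _respond(start_response, "200 OK", json.dumps(todos).encode(), "application/json")
    if method == "POST":
        # Expects a JSON body such as {"todo": "buy milk"}.
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        if "todo" not in payload:
            return _respond(start_response, "400 Bad Request", b"missing 'todo'")
        todos.append(payload["todo"])
        return _respond(start_response, "200 OK", b"OK")
    if method == "DELETE":
        # Expects the item index as a query parameter, e.g. ?todo_idx=0
        raw = parse_qs(environ.get("QUERY_STRING", "")).get("todo_idx", [""])[0]
        if not raw.isdigit():
            return _respond(start_response, "400 Bad Request", b"missing 'todo_idx'")
        idx = int(raw)
        if idx < len(todos):
            todos.pop(idx)  # out-of-range indexes are ignored silently
        return _respond(start_response, "200 OK", b"OK")
    return _respond(start_response, "405 Method Not Allowed", b"Method Not Allowed")


if __name__ == "__main__":
    # Port 3333 is an arbitrary choice for local testing.
    with make_server("localhost", 3333, todo_app) as server:
        server.serve_forever()
```

The storage logic is deliberately trivial; what matters for the AI is that each operation is a plain HTTP endpoint that a specification can describe.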

This API for managing TODO list items has a specification in OpenAPI 3, which I show in the following code snippet.

API specification for a ChatGPT Plugin (Source: OpenAI)

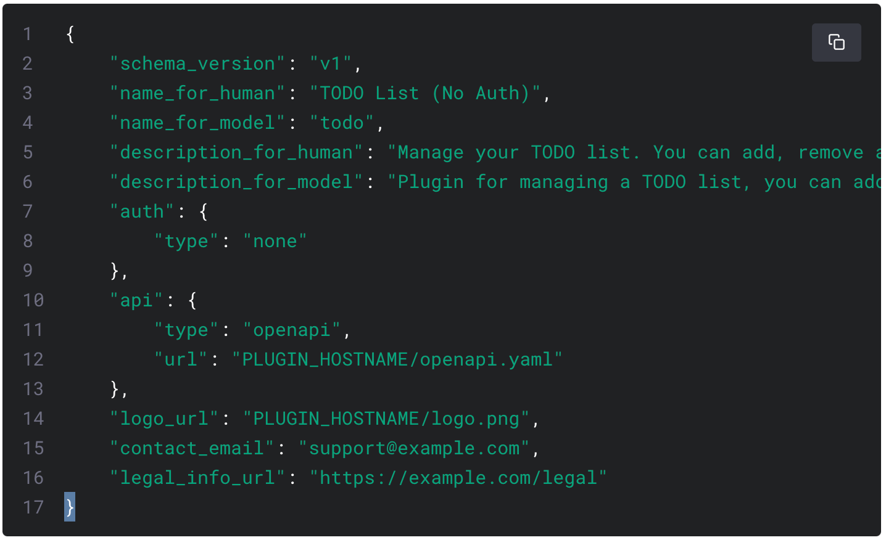
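An OpenAPI document can be written in YAML or JSON. To stay in one language, here is a hedged sketch of a minimal OpenAPI 3 specification for a TODO-list API, built as a Python dict and serialized to JSON. The operation IDs (`getTodos`, `addTodo`, `deleteTodo`), the server URL, the `todo_idx` query parameter, and all descriptions are illustrative assumptions, not OpenAI's spec. The `summary` and `description` texts are worth writing carefully, since they help the model decide which operation fits a prompt.

```python
import json


def todo_openapi_spec(server_url: str = "http://localhost:3333") -> dict:
    """Return a minimal, hypothetical OpenAPI 3 document for a TODO-list API."""
    # Path parameter shared by all three operations.
    username = {
        "name": "username",
        "in": "path",
        "required": True,
        "description": "The name of the user whose TODO list is accessed.",
        "schema": {"type": "string"},
    }
    ok = {"200": {"description": "OK"}}
    return {
        "openapi": "3.0.1",
        "info": {
            "title": "TODO Plugin",
            "description": "Lets the user add, view, and delete TODO items.",
            "version": "v1",
        },
        "servers": [{"url": server_url}],
        "paths": {
            "/todos/{username}": {
                "get": {
                    "operationId": "getTodos",
                    "summary": "Get the user's list of TODO items",
                    "parameters": [username],
                    "responses": ok,
                },
                "post": {
                    "operationId": "addTodo",
                    "summary": "Add an item to the user's TODO list",
                    "parameters": [username],
                    "requestBody": {
                        "required": True,
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "properties": {"todo": {"type": "string"}},
                                    "required": ["todo"],
                                }
                            }
                        },
                    },
                    "responses": ok,
                },
                "delete": {
                    "operationId": "deleteTodo",
                    "summary": "Delete an item from the user's TODO list by its index",
                    "parameters": [
                        username,
                        {
                            "name": "todo_idx",
                            "in": "query",
                            "required": True,
                            "description": "Zero-based index of the item to delete.",
                            "schema": {"type": "integer", "minimum": 0},
                        },
                    ],
                    "responses": ok,
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(todo_openapi_spec(), indent=2))
```

Passing the item index as a query parameter keeps the sketch within OpenAPI 3.0, which does not define request bodies for `DELETE`.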

And finally, I show you the code snippet of the plugin manifest file that binds implementation, spec, and descriptions together.

Plugin manifest for a ChatGPT Plugin (Source: OpenAI)
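Since the manifest itself is not reproduced here, the following Python sketch builds one as a dict and prints it as JSON. The field names follow the `ai-plugin.json` format from OpenAI's plugin documentation (`schema_version`, `name_for_model`, `description_for_model`, `api`, and so on); all values, URLs, and the contact address are placeholders assumed for illustration.

```python
import json


def plugin_manifest(base_url: str = "http://localhost:3333") -> dict:
    """Build a placeholder ChatGPT plugin manifest (ai-plugin.json) for a TODO plugin."""
    return {
        "schema_version": "v1",
        "name_for_human": "TODO List",
        "name_for_model": "todo",
        "description_for_human": "Manage your TODO list. You can add, remove and view your TODOs.",
        # Text aimed at the model: it helps decide whether this plugin fits a prompt.
        "description_for_model": "Help the user with managing a TODO list. You can add, remove and view their TODOs.",
        "auth": {"type": "none"},
        # Points to the OpenAPI specification that describes the API operations.
        "api": {"type": "openapi", "url": f"{base_url}/openapi.yaml"},
        "logo_url": f"{base_url}/logo.png",
        "contact_email": "support@example.com",
        "legal_info_url": "http://www.example.com/legal",
    }


if __name__ == "__main__":
    # The manifest is conventionally served at /.well-known/ai-plugin.json.
    print(json.dumps(plugin_manifest(), indent=2))
```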

When the plugin is activated and a prompt mentions anything about managing the TODO list (adding, removing, or updating a TODO item), the AI calls the API with the appropriate parameters.

With this API, the AI-service gains the ability to act in the digital world by adding, removing, or updating TODO list items. In this sense, the API is the hand of the AI.
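Something has to turn the model's text output into an actual API call; with plugins, ChatGPT takes care of that step itself. For an AI-service you build yourself, the glue code could look roughly like the following Python sketch. It assumes the model has been instructed to answer with a JSON object naming an action and its arguments; the actions `set_thermostat` and `send_text_message` are hypothetical stand-ins for real API calls.

```python
import json
from typing import Any, Callable


# Hypothetical actions. In a real system each would call an external API
# (a smart-home hub, an SMS gateway, ...); here they only return a description.
def set_thermostat(celsius: float) -> str:
    return f"thermostat set to {celsius} degrees C"


def send_text_message(to: str, text: str) -> str:
    return f"text message to {to}: {text}"


# Registry of the only actions the AI-service may trigger.
ACTIONS: dict[str, Callable[..., Any]] = {
    "set_thermostat": set_thermostat,
    "send_text_message": send_text_message,
}


def act_on(model_output: str) -> Any:
    """Turn the model's text output (the 'idea') into a call of a registered action."""
    decision = json.loads(model_output)  # raises ValueError if the output is not valid JSON
    name = decision.get("action")
    if name not in ACTIONS:
        raise ValueError(f"unknown or missing action: {name!r}")
    return ACTIONS[name](**decision.get("args", {}))


if __name__ == "__main__":
    # Pretend the LLM produced this text in response to "It's too warm in here."
    print(act_on('{"action": "set_thermostat", "args": {"celsius": 21}}'))
```

The explicit registry limits the AI to actions a developer has deliberately exposed, which is already a first, very simple safeguard.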

Safeguarding API usage

When an AI can call APIs that trigger actions in the real or digital world, we need to put safeguards in place. The API itself, or an API management system, is probably a good place to define and enforce such safeguards. Safeguarding API usage in an AI context needs careful study and likely goes beyond the capabilities typically available today. It is an interesting field of research!
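What such safeguards should look like is still an open question. As a purely illustrative sketch (not an existing API-management feature), here is a Python policy check that could sit between an AI-service and the APIs it wants to call. It assumes three hypothetical rules: only allow-listed paths may be called, read-only methods pass automatically, and state-changing methods need explicit approval, for example from a human.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ApiCall:
    method: str  # HTTP method the AI-service wants to use, e.g. "GET" or "DELETE"
    path: str    # target path, e.g. "/todos/alice"


# Hypothetical policy: path prefixes the AI-service may call at all ...
ALLOWED_PREFIXES = ("/todos/",)
# ... and methods that only read data and therefore pass without approval.
READ_ONLY_METHODS = frozenset({"GET", "HEAD"})


def is_permitted(call: ApiCall, approve: Callable[[ApiCall], bool]) -> bool:
    """Decide whether an AI-initiated API call may be executed."""
    if not call.path.startswith(ALLOWED_PREFIXES):
        return False  # endpoint not exposed to the AI at all
    if call.method.upper() in READ_ONLY_METHODS:
        return True  # reads have no side effects
    return approve(call)  # state-changing call: ask a human (or another policy) first


def deny_all(call: ApiCall) -> bool:
    """Approval callback that rejects every state-changing call."""
    return False


if __name__ == "__main__":
    print(is_permitted(ApiCall("GET", "/todos/alice"), deny_all))      # True
    print(is_permitted(ApiCall("DELETE", "/todos/alice"), deny_all))   # False
    print(is_permitted(ApiCall("POST", "/payments"), lambda c: True))  # False
```

In practice, checks like these would more likely be enforced in the API or an API gateway than in the client, so that every caller, AI or not, is subject to them.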

Summary: Two AI-API Patterns

APIs and AI technologies need to be applied synergistically to create powerful applications. We uncovered two patterns for using AI and APIs together: (1) when an application integrates AI functionality, it typically calls an AI-API and passes input in the form of a prompt; and (2) when an AI-service needs to get an action done, or just needs some real-time data, it can call an API.

When building applications that leverage AI, you can use these two patterns individually or in combination, and they can even be orchestrated. I will explore this in an upcoming post. Stay tuned.