Bridging the Gaps in LLMs with Your APIs

In human history, tool usage was a significant evolutionary milestone, and as LLMs start using tools (a.k.a. APIs), this could become just as significant. How does “LLM tool usage” work and what should you do now to avoid standing on the sidelines of this AI revolution?

The ability to create and utilize tools to overcome challenges marks a decisive moment in human evolution, dating back over 3.3 million years. To this day, we remain fascinated by highly intelligent animals that display signs of this ability.

Large Language Models (LLMs) excel at generating both natural and programming languages: no less, but also no more. They are like a person who offers an opinion on everything without ever actually doing anything, hence the term “brains without hands.” However, models have started using tools, a development that may parallel the massive impact tool usage had on human progress. It’s as if we are currently witnessing the machine sharpening a stone to fashion a tool, like a knife for cutting food.

This article serves as an easy-to-understand introduction to LLM tool usage and highlights the most important use cases.

A simple example

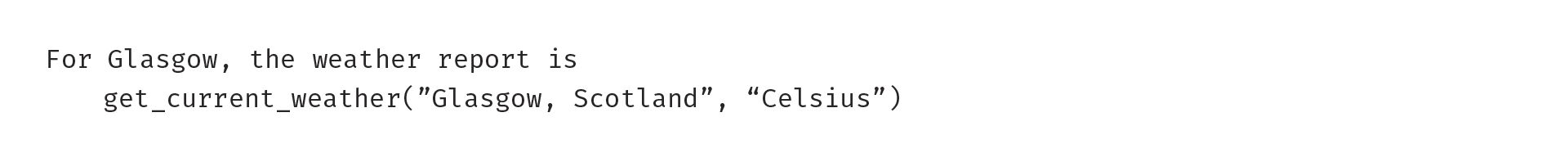

Try giving an LLM a prompt such as: “What is the weather in Glasgow, Scotland?” The answer will be well phrased, use the correct terminology to describe the weather, and may even describe the typical weather found in Scotland… but it is likely to be inaccurate. That is because LLMs are trained on historical data (maybe even historical weather data), but they know nothing about the current weather. And if you are getting ready to leave the house, the current weather is all that matters to you. This reveals a significant gap in the LLM’s capability: it lacks access to up-to-date data, in this case today’s weather.

This problem extends beyond weather queries; there are many examples of LLMs exhibiting similar knowledge or capability gaps. Many of these gaps can be filled by “using tools”: executing an appropriate function call (mostly an API call) as part of answering a prompt. Tools augment and complement the capabilities of LLMs and help overcome their fundamental limitations.

The basic mechanics of how LLMs use functions

The technology is nascent and lacks standardized terminology: it is referred to as “function calling”, “tool usage”, “API invocation”, and more. For clarity, let’s call it “function calling” here.

For an LLM, function calling involves three fundamental steps:

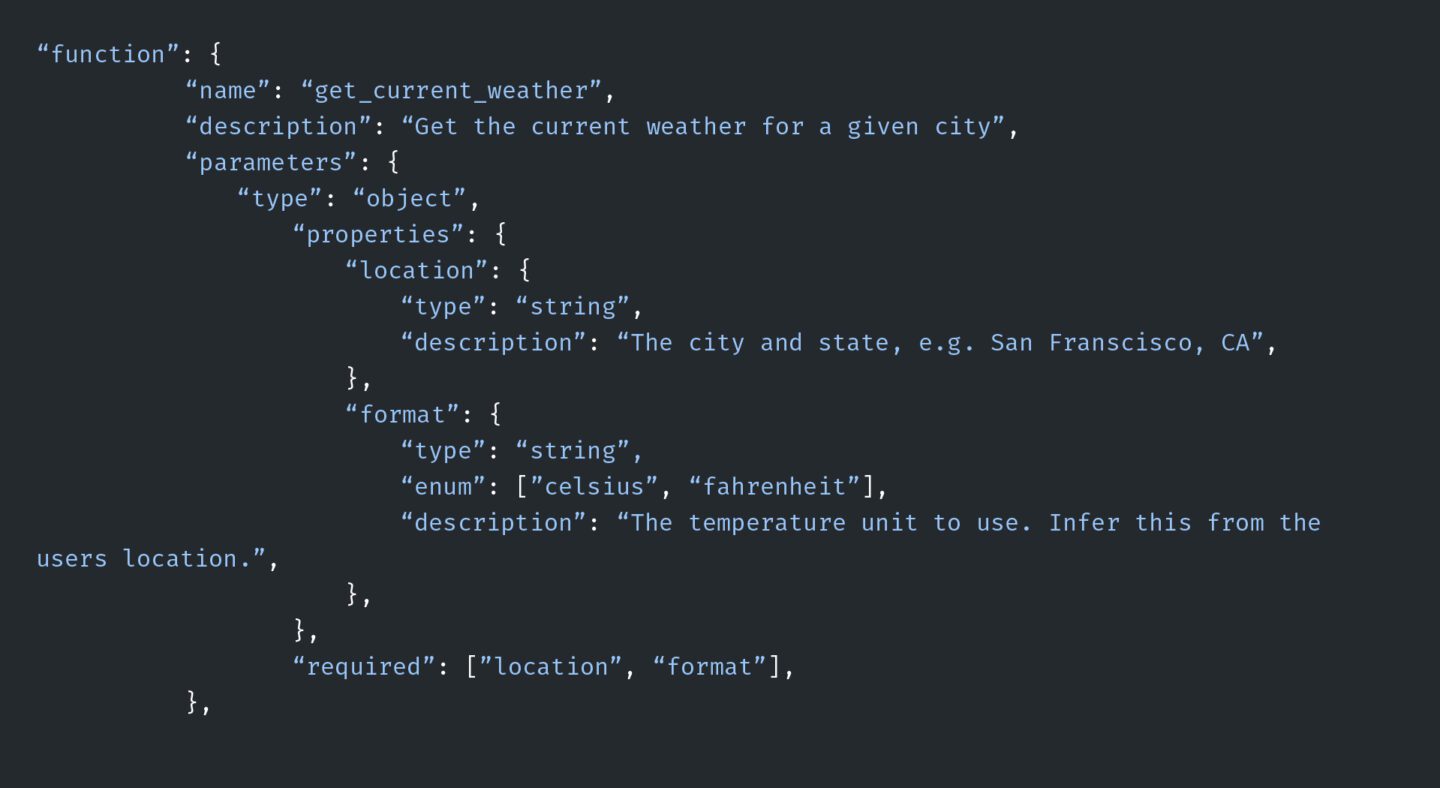

1. The model is given information about one or more functions (their “signature”).

The signature consists of both the technical syntax (e.g. GET /weather?city={cityname}) and the semantics, i.e. the meaning of the function and its attributes (e.g. “Get the current weather for a given city”). The semantics are provided through natural-language descriptions (which is what LLMs are good at understanding!).

The signatures can be provided at different times:

- (1a) At runtime of the model, as an addition to the prompt that asks for the tool usage

- (1b) At runtime of the model, as a configuration (for example, a “plugin”) loaded before the prompt that would use the function, e.g. realized as “embeddings” with semantic search

- (1c) At the time of training of the model

We will look into the implications of these different design options further below.

A function forecasting the weather often serves as the ubiquitous “Hello World” example for function calling. Its signature combines the syntax GET /weather?city={cityname} with the description “Get the current weather for a given city.”
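To make the two halves of a signature concrete, here is a minimal Python sketch that writes the weather function down as data, pairing the syntax (method, path, query parameter) with natural-language semantics. The field names (name, method, path, description, parameters) and the function name get_current_weather are illustrative assumptions, loosely modeled on JSON-Schema-style tool descriptions; they do not follow any particular vendor's format.

```python
# Hypothetical signature for the weather "Hello World" function.
# Syntax: HTTP method, path, and query parameters.
# Semantics: natural-language descriptions the LLM can understand.
WEATHER_SIGNATURE = {
    "name": "get_current_weather",
    "method": "GET",
    "path": "/weather",
    "description": "Get the current weather for a given city",
    "parameters": {
        "city": {
            "type": "string",
            "description": "Name of the city, e.g. 'Glasgow, Scotland'",
            "required": True,
        },
    },
}


def describe(signature: dict) -> str:
    """Render a signature as plain text, combining its syntax and semantics."""
    query = "&".join(f"{name}={{{name}}}" for name in signature["parameters"])
    lines = [
        f"{signature['method']} {signature['path']}?{query}",
        f"  {signature['description']}",
    ]
    for name, spec in signature["parameters"].items():
        lines.append(f"  - {name} ({spec['type']}): {spec['description']}")
    return "\n".join(lines)


print(describe(WEATHER_SIGNATURE))
```

The first line of the output, GET /weather?city={city}, is the precise syntax; the remaining lines carry the meaning that lets the model decide when the function is relevant.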

2. The LLM system identifies the tool.

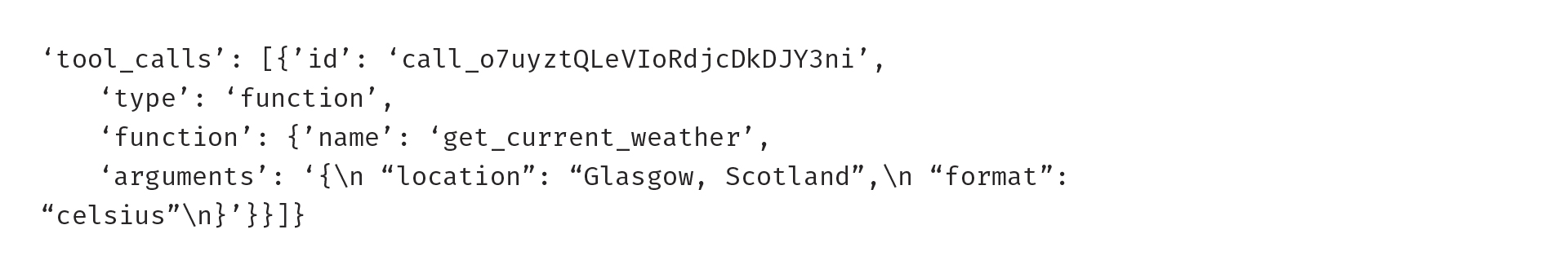

When a user submits a query (prompt) to the LLM system, such as “What is the weather in Glasgow, Scotland?”, the system determines whether a function could help respond to the query and, if so, which one. Optionally, the user can ask the LLM to use a preferred function, if known.
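In real systems the model itself decides which function fits, or, with plugin-style configurations, an embedding-based semantic search narrows down the candidates. As a rough, hypothetical stand-in for that step, the sketch below scores each tool by how many content words its description shares with the query and falls back to answering without a function when nothing matches. The tool names and the stopword list are assumptions for illustration only.

```python
import re
from typing import Optional

# Hypothetical tool registry: function name -> natural-language description.
TOOLS = {
    "get_current_weather": "Get the current weather for a given city",
    "convert_currency": "Convert an amount of money from one currency to another",
}

# Tiny illustrative stopword list so that filler words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "in", "of", "to", "for", "from", "what", "me"}


def content_words(text: str) -> set[str]:
    """Lowercase the text, split it into words, and drop stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def select_tool(query: str, tools: dict[str, str], min_overlap: int = 1) -> Optional[str]:
    """Pick the tool whose description shares the most content words with the query.

    Returns None when no tool reaches min_overlap, i.e. the system should
    answer without a function call.
    """
    query_words = content_words(query)
    best_name, best_score = None, 0
    for name, description in tools.items():
        score = len(query_words & content_words(description))
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= min_overlap else None


print(select_tool("What is the weather in Glasgow, Scotland?", TOOLS))
```

A word-overlap score is deliberately crude: it cannot tell that “What should I be wearing outside in Lienz tonight?” is a weather question, which is exactly the kind of indirect association a language model contributes.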

3. The LLM system responds in one of the following ways (referred to later as “3a” to “3d”):

- It generates and returns the function call (hopefully with correct syntax) but does not actually invoke the function. For example:

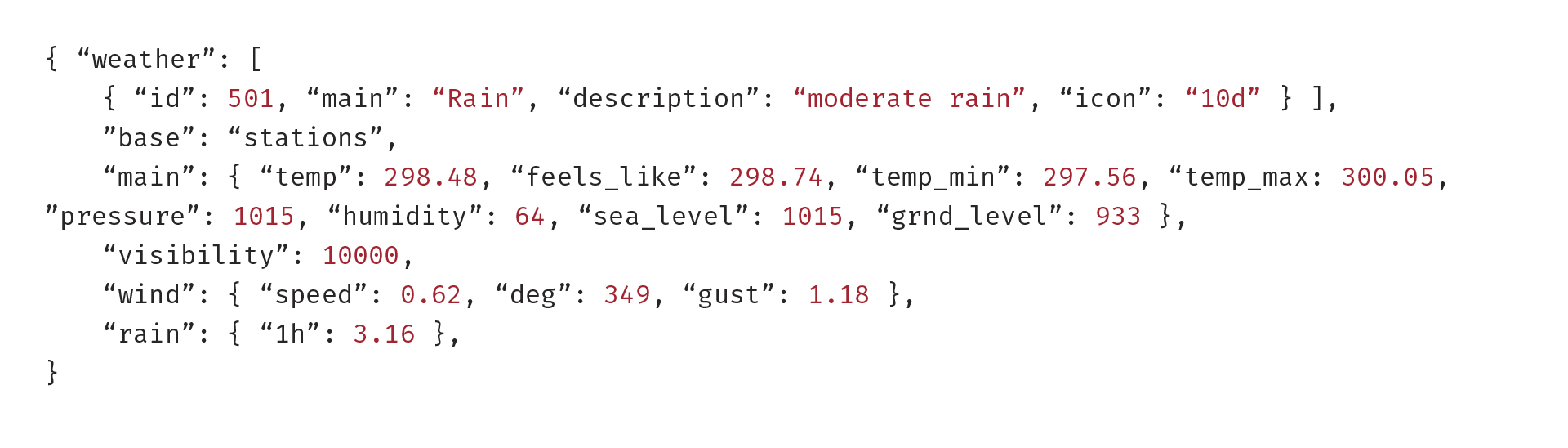

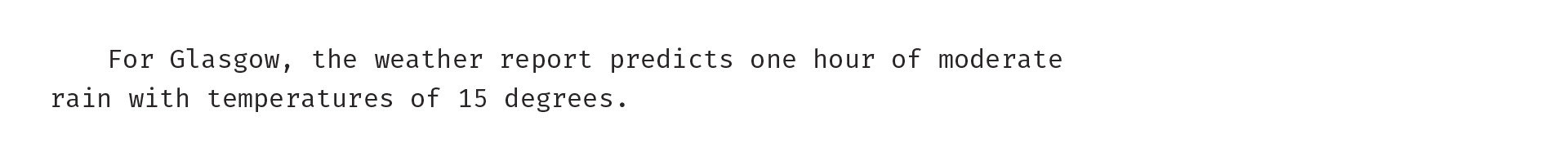

- It invokes the function and returns the result. For example:

- It generates a natural language response that includes an un-executed function call:

- It generates a natural language response, invokes the function, and embeds the result:
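The four response modes differ only in whether the call is executed and whether the output is wrapped in natural language. The sketch below makes that explicit. Mode, respond, and fake_execute are hypothetical names, and fake_execute is a stub that stands in for a real API call and returns a placeholder rather than real weather data.

```python
from enum import Enum
from typing import Callable


class Mode(Enum):
    CALL_ONLY = "3a"         # return the generated call without invoking it
    INVOKE = "3b"            # invoke the function and return its raw result
    TEXT_WITH_CALL = "3c"    # natural-language answer containing the unexecuted call
    TEXT_WITH_RESULT = "3d"  # invoke the function and embed the result in the answer


def respond(call: str, execute: Callable[[str], str], mode: Mode) -> str:
    """Produce the system's output for an already generated function call."""
    if mode is Mode.CALL_ONLY:
        return call
    if mode is Mode.INVOKE:
        return execute(call)
    if mode is Mode.TEXT_WITH_CALL:
        return f"You can get the current weather with this request: {call}"
    # Mode.TEXT_WITH_RESULT is the only remaining case.
    return f"Here is the current weather: {execute(call)}"


def fake_execute(call: str) -> str:
    """Stub standing in for a real API call; returns a placeholder, not real data."""
    return f"<result of {call}>"


for mode in Mode:
    print(mode.value, respond("GET /weather?city=Glasgow", fake_execute, mode))
```

Only modes 3b and 3d ever call execute, which is why they require the system to have a way to actually reach the API.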

Generating the right syntax is crucial. Users do (or at least they should!) accept imprecisions in natural-language responses from LLMs. However, function interfaces (APIs) are by nature precise, and any syntax error will cause the call to be rejected. The success of function calling will depend on the ability to go from the (imprecise) world of natural language prompts to the (precise) domain of functions.
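Because an API accepts only precisely formed requests, a caller can check a generated call against the signature before sending it. This is a minimal sketch, assuming the model emits calls as a single line of the form METHOD /path?query and assuming a hypothetical weather signature with one required city parameter; it uses only urllib.parse from the Python standard library.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical signature: GET /weather with exactly one required query parameter.
WEATHER_SIG = {"method": "GET", "path": "/weather", "required": {"city"}, "optional": set()}


def validate_call(call: str, sig: dict = WEATHER_SIG) -> list[str]:
    """Return the problems found; an empty list means the call fits the signature."""
    problems = []
    method, _, target = call.strip().partition(" ")
    if method != sig["method"]:
        problems.append(f"unexpected method {method!r}")
    parts = urlsplit(target)
    if parts.path != sig["path"]:
        problems.append(f"unknown path {parts.path!r}")
    # parse_qs drops blank values by default, so "city=" counts as missing.
    given = set(parse_qs(parts.query))
    missing = sig["required"] - given
    unknown = given - sig["required"] - sig["optional"]
    if missing:
        problems.append(f"missing parameters: {sorted(missing)}")
    if unknown:
        problems.append(f"unknown parameters: {sorted(unknown)}")
    return problems


print(validate_call("GET /weather?city=Glasgow%2C%20Scotland"))  # []
print(validate_call("GET /weather?town=Glasgow"))
```

The second call is the kind of near miss that reads fine to a human (“town” instead of “city”) but that the API would reject.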

How to tell an LLM about a function

As with all artificial neural networks, there are two fundamental ways of interacting with an LLM: model training and model usage (“inference”).

The simplest way to tell an LLM about a function is to describe it directly in the prompt during model usage (option 1a). In this approach, function calling is no more and no less than a sub-discipline of the art and science of prompt engineering. Both the advantages and the disadvantages are obvious. On the upside, everybody with access to an LLM can do this, and the community can jointly develop the best prompts, always with the latest and greatest LLM under the hood. On the downside, the function cannot actually be invoked this way (no 3b or 3d), generating the right syntax can be error-prone, and the prompt size limits the number of functions that can be declared.
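Option 1a amounts to string building: the function descriptions are pasted into the prompt, and the application has to fish the call out of the model's text reply. The sketch below assumes a made-up reply convention (a line starting with CALL:) and hypothetical helper names. The model itself is not called here, which mirrors the limitation that this option generates calls but does not invoke them.

```python
import re
from typing import Optional

# Hypothetical function list: (syntax, natural-language meaning).
FUNCTIONS = [
    ("GET /weather?city={city}", "Get the current weather for a given city"),
]


def build_prompt(question: str, functions=FUNCTIONS) -> str:
    """Option 1a: paste the function descriptions directly into the prompt."""
    listing = "\n".join(f"- {syntax}: {meaning}" for syntax, meaning in functions)
    return (
        "You can use the following functions:\n"
        f"{listing}\n"
        "If one of them helps, answer with a single line 'CALL: <request>'.\n\n"
        f"Question: {question}"
    )


def extract_call(reply: str) -> Optional[str]:
    """Pull the generated call out of the model's free-text reply, if there is one."""
    match = re.search(r"^CALL: (.+)$", reply, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


print(build_prompt("What is the weather in Glasgow, Scotland?"))
```

Every declared function adds text to the prompt, which is where the limit on the number of functions comes from.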

The second option is to provide a configuration to the LLM service (option 1b). The ChatGPT plugin concept is an example of this approach: tools are described in manifest files and loaded when the model is started.

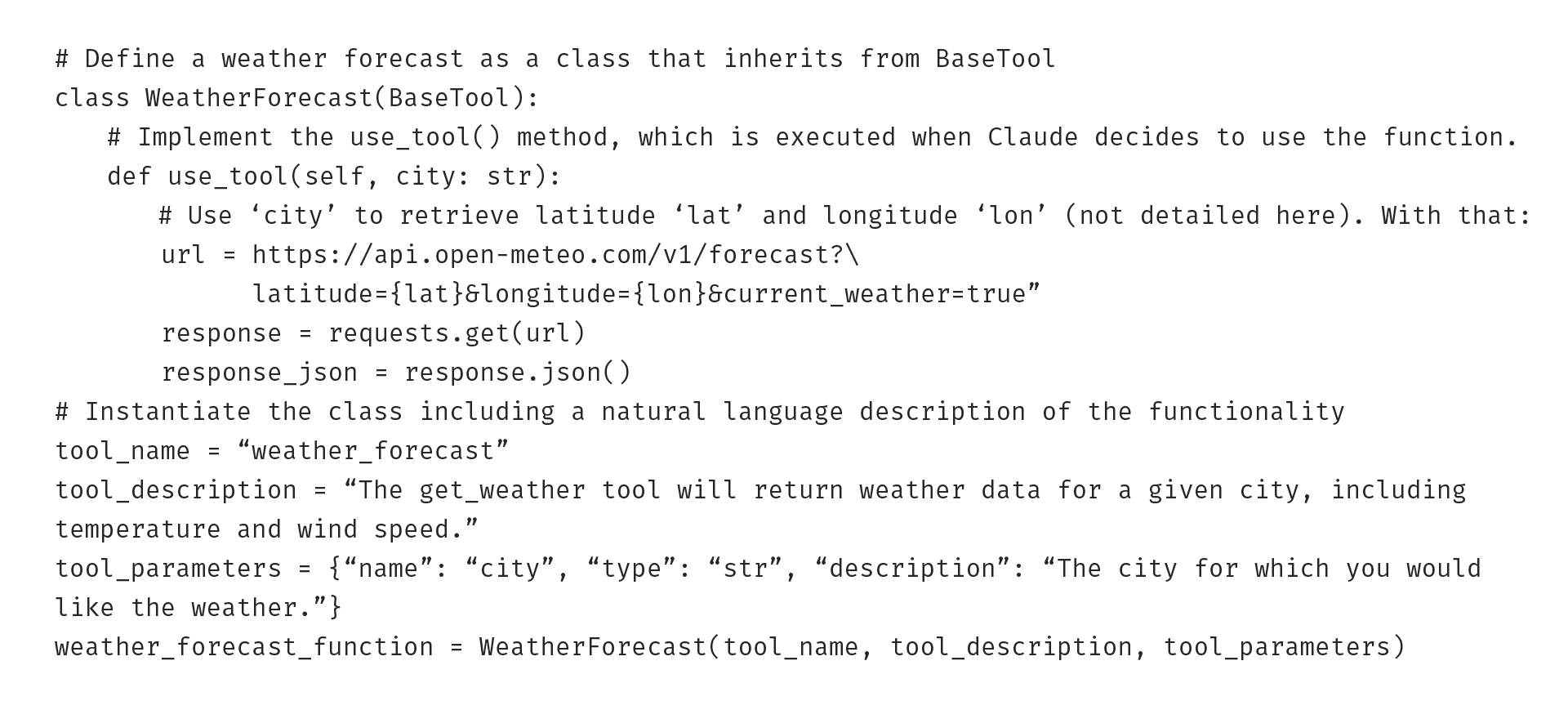

Let’s look at Anthropic’s LLM “Claude” as another example, as it provides an early (alpha) version of function calling. It requires programming skills, however: this cannot be achieved by entering a prompt in a browser UI alone.

To make this work, Anthropic provides a Python base class called BaseTool. To teach the model a function, developers define and instantiate a class, say, WeatherForecast, as a subclass of BaseTool. They must also implement the method use_tool(), which invokes the actual function.
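The pattern just described can be sketched in a self-contained way as follows. The BaseTool below is a local stand-in written for illustration; it is not Anthropic's actual class, whose constructor arguments and methods may differ. The fetch callable is a hypothetical placeholder for the HTTP client that would call a real weather API.

```python
from abc import ABC, abstractmethod
from typing import Callable


class BaseTool(ABC):
    """Local stand-in for illustration only; NOT Anthropic's actual BaseTool."""

    def __init__(self, name: str, description: str, parameters: list[dict]):
        self.name = name                # identifier the model refers to
        self.description = description  # semantics, in natural language
        self.parameters = parameters    # syntax: the expected arguments

    @abstractmethod
    def use_tool(self, **kwargs) -> str:
        """Invoke the actual function with the arguments the model chose."""


class WeatherForecast(BaseTool):
    def __init__(self, fetch: Callable[[str], str]):
        super().__init__(
            name="get_weather_forecast",
            description="Get the current weather for a given city",
            parameters=[{"name": "city", "type": "str",
                         "description": "City name, e.g. 'Glasgow, Scotland'"}],
        )
        # Hypothetical client that would send GET /weather?city=... to a real API.
        self._fetch = fetch

    def use_tool(self, city: str) -> str:
        return self._fetch(city)


# A stub fetcher keeps the example offline; it returns a placeholder, not real data.
tool = WeatherForecast(fetch=lambda city: f"<weather data for {city}>")
print(tool.use_tool(city="Glasgow, Scotland"))
```

The division of labor is the point: the description and parameters are what the framework turns into prompt text, while use_tool is where the real API call happens.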

That’s all. End users can now submit prompts, and Anthropic’s “function calling” will provide full automation, including embedded responses (3d). Under the hood, and hidden from the end user, it uses prompt augmentation. This approach has various advantages over the first option. Function declaration and prompt engineering are separated and hidden from end users. Syntax errors when generating the API call are ruled out (a major advantage). APIs are actually invoked and their results are embedded. Everybody with a computer running Python can do this. The downsides are that programming skills are required to describe the tool (not rocket science, though) and that this solution is at an early stage at the time of this writing.

The third option is to train a model on the functions upfront (option 1c). This scales to any number of functions. In fact, an organization with deep pockets could attempt to train a model on all functions available on the internet, a mind-boggling thought. The resulting LLM could not only “have an opinion” about “everything” but actually do “everything”, within the limits of publicly available functions.

There are downsides as well. On the scale of the internet, new functions continuously become available and existing functions change. Keeping them up to date in the model requires re-training it, which is a much higher hurdle than reacting to changed signatures in the previous approaches. So, while the functions deliver up-to-date information, the model’s knowledge of their signatures might be outdated. This reduces the timeliness of the entire solution, compromising one of the primary objectives of function calling.
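One way to picture the staleness problem is to compare the signatures a model learned at training time with those the live API currently exposes. The sketch below reduces each signature to a set of parameter names, an intentional simplification, and all function names in the example are hypothetical.

```python
def signature_drift(trained: dict[str, set[str]], live: dict[str, set[str]]) -> dict[str, str]:
    """Compare signatures known at training time with those the live API exposes.

    Both arguments map a function name to its set of parameter names; this
    deliberately ignores types, defaults, and descriptions.
    """
    report = {}
    for name in sorted(trained.keys() | live.keys()):
        if name not in live:
            report[name] = "removed from API"    # the model would generate dead calls
        elif name not in trained:
            report[name] = "unknown to model"    # a new function the model cannot use
        elif trained[name] != live[name]:
            report[name] = "parameters changed"  # generated calls may be rejected
    return report


# Hypothetical example: the API changed after the model was trained.
trained = {"get_weather": {"city"}, "get_forecast": {"city", "days"}}
live = {"get_weather": {"city", "country"}, "get_air_quality": {"city"}}
print(signature_drift(trained, live))
```

With prompt-based or configuration-based declarations (options 1a and 1b), fixing such drift means updating a description; with option 1c, it means re-training.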

Key use case categories

We see four categories of use cases for tool usage in LLMs.

1. Retrieval of real-time and short-lived data

An LLM only knows the historical data it was trained on. Functions can fill this gap by providing the necessary real-time or newly generated data. Retrieving weather information is a use case in this category.

2. Interpretation of complex queries and finding appropriate sources

Actually, the query “What is the weather in Glasgow, Scotland?” underuses the language model — arguably such a direct request could be handled equally well without a language model in the mix. But language models can interpret much richer and more indirect queries as well, for example “What should I be wearing outside in Lienz tonight?” Bing Chat gives the convincing answer:

I’m glad you asked! According to my search results, the temperature in Lienz tonight is expected to be around -2°C. It’s going to be cold outside, so I recommend wearing warm clothing such as a thick coat, gloves, and a hat to keep yourself comfortable. You might also want to consider wearing waterproof boots if there’s a chance of snow or rain. Stay warm and enjoy your evening!

(Note: Whether Bing Chat actually used function calling here is doubtful, though! It cited TripAdvisor as the source for this, not a primary source for up-to-date weather.)

This is so much more than the answer to a direct question: the LLM associates “wearing things outside” with “picking clothing that suits the weather”, and it associates -2°C with appropriate clothing. LLMs can not only find the right function in straightforward cases but also use their language understanding to find relevant functions the end user may not even have considered. Once models trained on large numbers of APIs are available, it will be amazing to see LLMs’ “semantic association” combined with the precision and timeliness of function results.

3. Addition of functions that neural nets are not well suited for

Function calling will simply come to the rescue for tasks that LLMs are intrinsically bad at. It’s been about a year since we discussed this in “What ChatGPT is bad at.” For example, the LLM could use WolframAlpha for mathematical problems. We described the challenge of using Generative AI for things it is good at while using other tools for a different set of problems. In fact, we referred to this ability as an element of “AI literacy” that we all need to urgently develop. Function calling is still very young, but someday we may have a single system that can do both, so we do not have to make the decision upfront about what to use.
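As a small illustration of delegating work that neural nets handle poorly, the sketch below evaluates arithmetic deterministically instead of letting the model predict digits. It is a local stand-in for a service like WolframAlpha, not its API: it parses the expression with Python's ast module and allows only numbers and the operators +, -, *, /, and **, rejecting everything else (names, function calls, attribute access).

```python
import ast
import operator

# Only these operators are allowed; any other syntax is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY = {ast.USub: operator.neg}


def calculate(expression: str) -> float:
    """Evaluate an arithmetic expression with ordinary Python arithmetic."""

    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return ev(ast.parse(expression, mode="eval"))


print(calculate("1234 * 5678"))  # 7006652
```

In a function-calling setup, the model's job shrinks to translating the question into an expression string; the exact answer comes from the tool.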

4. Triggering actions in the real or digital world

Last but not least, LLMs by themselves cannot perform actions in the world. Functions, however, are the LLM’s missing hands: they can execute actions in the digital (or, indirectly, the real) world, such as paying a bill, booking a flight, or sending a text message.

Some of these four use case categories may be relevant to your customers. How can you ensure that your customers can actually realize these use cases?

Be part of the evolutionary milestone…

As LLMs integrate tools, much as humans came to use tools in the course of evolution, how can you position yourself to take part in this evolution and profit from it, rather than find yourself on the sidelines?

It is a simple process: (1) make tools, i.e. your apps, data, and capabilities, available via APIs, and (2) ensure that the tools are used, i.e. that LLMs can find, leverage, and execute your APIs because they are published, well-documented, managed, and governed.
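In practice, “published and well-documented” often means an OpenAPI description, which is also the format ChatGPT plugins consume. The sketch below, assuming a minimal OpenAPI 3 document for a hypothetical weather API, shows how the operation summaries and parameter descriptions you publish can be turned into the kind of signatures discussed above. Only a few OpenAPI fields are read; a real converter would also need to handle request bodies, $ref references, and path-level parameters.

```python
# Minimal OpenAPI 3 fragment for a hypothetical weather API.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Weather API", "version": "1.0.0"},
    "paths": {
        "/weather": {
            "get": {
                "operationId": "getCurrentWeather",
                "summary": "Get the current weather for a given city",
                "parameters": [{
                    "name": "city", "in": "query", "required": True,
                    "description": "City name, e.g. 'Glasgow, Scotland'",
                    "schema": {"type": "string"},
                }],
                "responses": {"200": {"description": "Current weather"}},
            }
        }
    },
}

# Path items may also hold keys such as "summary" or "parameters"; only these are operations.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def tools_from_openapi(spec: dict) -> list[dict]:
    """Turn each documented operation into an LLM-facing tool description."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue
            tools.append({
                "name": op.get("operationId", f"{method}_{path}"),
                "syntax": f"{method.upper()} {path}",
                "description": op.get("summary") or op.get("description", ""),
                "parameters": {
                    p["name"]: {"required": p.get("required", False),
                                "description": p.get("description", "")}
                    for p in op.get("parameters", [])
                },
            })
    return tools


print(tools_from_openapi(SPEC))
```

An operation without a summary or parameter descriptions would come out of this conversion with empty semantics, leaving the model nothing to match a user's request against.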

Without APIs, the LLM cannot use your data and functionality, your digital assets will be invisible to the LLM, and you might end up on the sidelines of the Cambrian explosion of AI development. With the advent of LLM tool usage, APIs have become even more important than we previously gave them credit for.

We cannot wait to see what LLMs will become capable of when they use your APIs!