Understanding the Role of Data Integration and Application Integration

Learn how to navigate the integration maze. Break free from category confusion and focus on business objectives, not tool labels.

Have you been active in the integration space over the last two decades, as I have? Then you have probably sat through too many overly theoretical discussions (as I have) centering on questions such as “Are we doing application integration or data integration, actually?”, “Our integration efforts are costly; are we doing something fundamentally wrong, or do we need to ‘rethink’ integration?”, “Are we missing the boat on new paradigms like Microservices Architecture or Event-driven Architecture?”, and “Do our tools make us go about integration in the wrong way?”

The terms “application integration” and “data integration” add to the confusion as they are not particularly clear-cut. This is especially true for “data integration,” because isn’t IT always about processing data (as the old-fashioned term “Electronic Data Processing” implies as well)? Two applications are integrated by taking the output of the first, reformatting it, and invoking a suitable function of the second with that. Certainly, data flows from one to the other, yet this is normally not called data integration.
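To make the two-application case concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `Order` type, the invoice request shape, and the `create_invoice` function merely stand in for the output of a first application and a function offered by a second one.

```python
from dataclasses import dataclass


@dataclass
class Order:
    """Output of the first (hypothetical) application, e.g. a web shop."""
    order_id: str
    customer: str
    total_cents: int


def to_invoice_request(order: Order) -> dict:
    """Reformat the order into the shape the second application is assumed to expect."""
    return {
        "reference": order.order_id,
        "customer_name": order.customer.strip().title(),
        "amount": order.total_cents / 100,  # cents to currency units
    }


def create_invoice(request: dict) -> str:
    """Stand-in for a function of the second (hypothetical) application."""
    return (
        f"INV-{request['reference']}: "
        f"{request['customer_name']} owes {request['amount']:.2f}"
    )


def integrate(order: Order) -> str:
    """Take the output of the first application, reformat it, invoke the second."""
    return create_invoice(to_invoice_request(order))


print(integrate(Order("1001", " ada lovelace ", 12950)))
```

Data clearly flows from one application to the other here, yet the point of the exercise is the invoice being created, not the data being moved.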

Nevertheless, the terms are firmly established in the market, not least because analysts define corresponding software and market categories. They are thus used by Software AG as well. It is, of course, beyond my reach to change the terms, but this contribution sets out to resolve some misunderstandings.

My fundamental suggestion is to stop thinking in terms of integration “categories” and to think instead in terms of the business objectives your integration solution should achieve.

Companies usually have neither ‘application integration’ departments nor ‘data integration’ departments — and they shouldn’t. However, they do perform business operations and business intelligence/analytics, with organizational structures reflecting that distinction.

Integration for Operational Purposes

Business operations execute the business processes that realize a company’s business purpose. Process participants include employees, machines, IoT devices, applications, partners, customers, and more. Process tasks have a transactional character: They move the object of the process from one state to the next. Data can be the object of the process itself, e.g., a loan application being processed. It can also be an informational representation of the physical object of the process, e.g., data describing a car built on the production line.

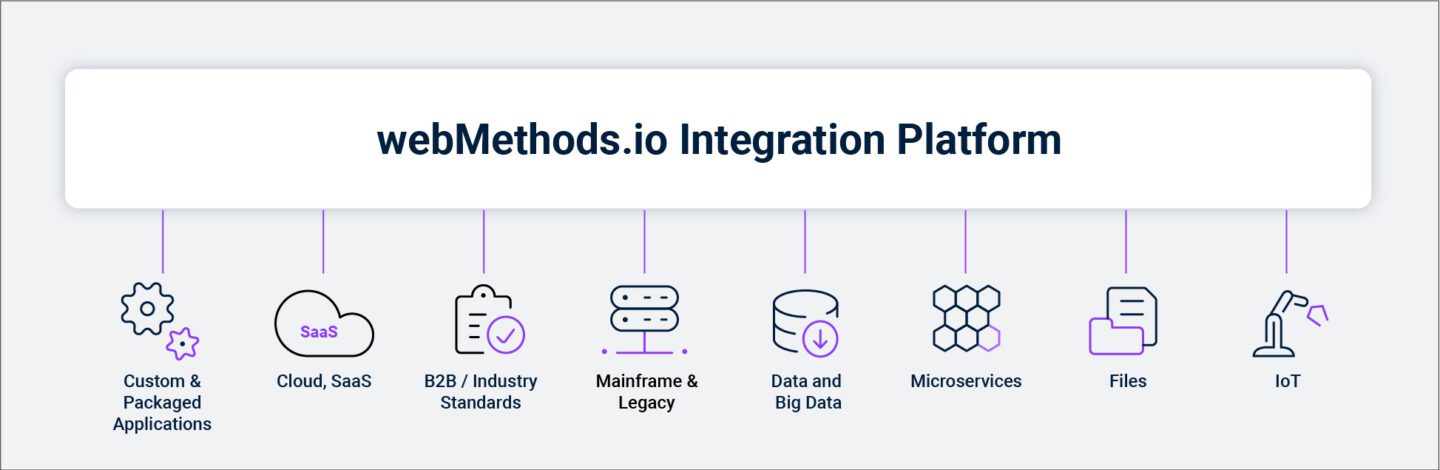

IT integration tools supporting this have to be versatile. They must be able to integrate two applications, as sketched above. They often need to implement and execute entire process chains. They also need to interface with and support manual tasks, as well as communicate with business partners using industry standards.

Again, the focus here is on the transformation of business objects, not the storage or physical movement of data. But of course, part of the job may be to store data (often temporarily) and archive it. Even moving flat files around remains a surprisingly widespread requirement in real-world business scenarios.

The ‘Swiss Army Knife’ integration tools for this are often referred to, in rather abbreviated form, as ‘application integration.’

Integration for Analytical Purposes, and Choosing the Right Tool

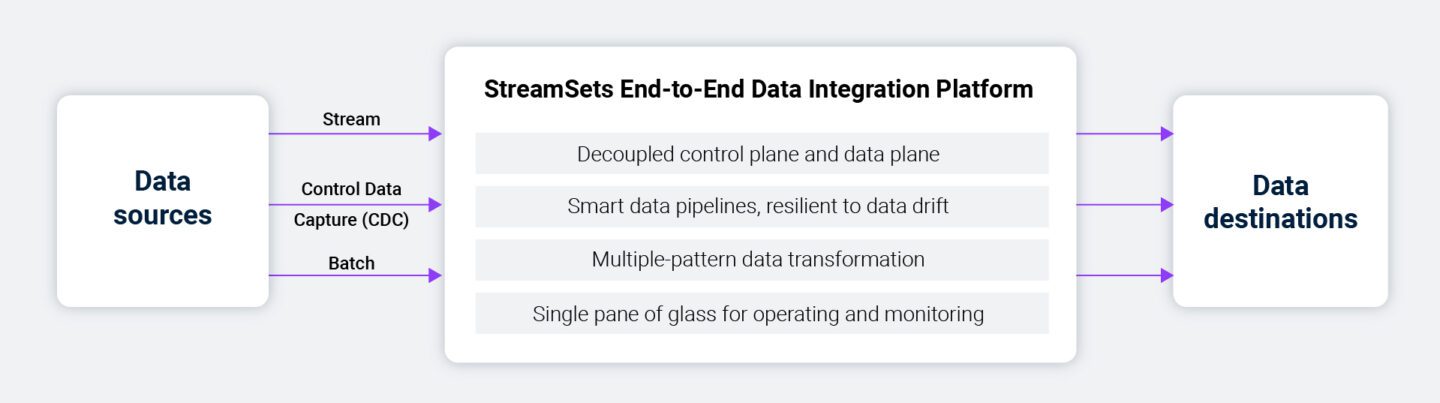

Business intelligence/analytics has a different objective: Gain business insight for better decision making based on data. However, data exists in many different places and formats. So, it must be brought together first, either physically in a single database/data lake, or virtually in the form of a single metamodel. Second, heterogeneous data must often be pre-processed to be analytics-ready. Analytics tools can deal with certain inconsistencies, e.g., they can associate data from two tables by date even if the tables use different date formats. But factoring in complex, semantic relationships typically requires data preprocessing.
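The date example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two tables, their column layout, and the three accepted date formats are made up for the example and do not describe any particular analytics tool.

```python
from datetime import date, datetime

# Date formats assumed to occur in the (hypothetical) source tables.
KNOWN_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def parse_date(text: str) -> date:
    """Try each known format in turn and return a normalized date."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def join_by_date(sales, weather):
    """Associate sales rows with weather rows that fall on the same day."""
    weather_by_day = {parse_date(day): label for day, label in weather}
    joined = []
    for day_text, amount in sales:
        day = parse_date(day_text)
        if day in weather_by_day:
            joined.append((day, amount, weather_by_day[day]))
    return joined


sales = [("2024-03-01", 100), ("2024-03-02", 80)]
weather = [("01.03.2024", "rain"), ("03.03.2024", "sun")]
print(join_by_date(sales, weather))
```

Normalizing a date is a purely syntactic fix. Deciding, say, that a “customer” in one system corresponds to a “contract holder” in another is a semantic question, and that is where dedicated preprocessing comes in.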

Important capabilities for a tool supporting this domain are (i) moving massive amounts of data from a wide variety of data sources, (ii) providing complex data transformation patterns (prominently ETL and ELT), and (iii) the ability to integrate data that is constantly growing and whose format is subject to frequent change (‘data drift’). The non-functional requirements have their own characteristics too. Beyond volume (massive amounts), they also concern consistency, latency, and security.
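What “data drift” means in practice can be illustrated with a small check that compares an incoming record against the format a pipeline expects. This is a simplified sketch, not how any specific data integration tool works; the `detect_drift` function and the example schema are hypothetical.

```python
def detect_drift(expected: dict, record: dict) -> dict:
    """Report how a record deviates from the expected field names and types.

    `expected` maps field names to Python types (an assumption made for
    this sketch; real tools track far richer schema information).
    """
    missing = sorted(name for name in expected if name not in record)
    unexpected = sorted(name for name in record if name not in expected)
    retyped = sorted(
        name
        for name, field_type in expected.items()
        if name in record and not isinstance(record[name], field_type)
    )
    return {"missing": missing, "unexpected": unexpected, "retyped": retyped}


expected_schema = {"id": int, "amount": float, "currency": str}
incoming = {"id": 7, "amount": "12.50", "region": "EU"}
print(detect_drift(expected_schema, incoming))
```

In this example, the source has dropped one field, added another, and started delivering amounts as text. A pipeline that moves large volumes continuously has to notice and absorb such changes without manual rework.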

Tools focusing on these tasks are called ‘data integration’, again a term that is more than a bit unspecific. Is there an overlap with ‘application integration’? Certainly! Every good application integration tool comes with a database adapter that can be used to unload data from a database, convert it, and load it to another — as any data integration tool would. Also, if an API of a business application supports CRUD (Create, Read, Update, and Delete) operations on a business object (as in create a loan application, update a loan application…), these may serve both operational and analytical needs.

This is not to downplay the differences between the two types of tools. Application integration is the Swiss Army Knife that supports the transformation of business objects across a broad variety of participants, while data integration excels at handling massive amounts of data and dealing with the constant change of data at rest.

In real life, the BI department uses a data integration tool, while business operations use a universal application integration tool for their tasks — including some everyday data handling jobs. However, they might employ data integration tools as well for specific requirements.

It might sound attractive to have a single master tool that provides a superset of all integration capabilities. However, such a tool would be rather overwhelming to any actual user. Operational and analytical users would use mostly distinct features, so the advantages of having a single tool would be minor, even before considering the important aspect of cost. As an analogy, look at Microsoft Office with Word, Excel, and PowerPoint.* Of course, there is functional overlap among the three. Yet we have all learned which tool to use for which task. We value a unified platform with central identity and access management (IAM), an aligned look and feel, and the possibility for the tools to collaborate (e.g., pasting a subset of an Excel sheet into a Word document), but we seldom, if ever, miss having a single tool.

To summarize: In the integration world at large, don’t waste your time in discussions about the semantics of confusingly named tools and market ‘categories.’ Instead, establish a set of functional and non-functional integration requirements by key roles in your organization. Find a unified platform supporting those.

The Role of Architecture

This topic cannot be concluded without at least mentioning architecture. Strategic development of a company’s application, service, process, and data landscapes requires corresponding ‘landscape planning.’ Better put, it requires IT and Enterprise Architecture, which in turn must be based on overarching architecture standards. Service-oriented architectures are an early example; later ones include event-driven architectures and microservice architectures.

It is beyond the scope of this contribution to discuss these standards in detail. What is important to understand: All of them are paradigms. They can’t be bought as tools. Architecture leads through principles and requires the understanding, the discipline, and the governance to work according to certain methods and procedures. Only then do the tools that support these methods and procedures come into play.

Do not neglect architecture. Develop a vision for application, service, process, and data architecture in your enterprise. Establish standards and governance because they suit your needs, not because they are the talk of the day. Avoid large architecture projects that intend to change the entire IT landscape without immediate business benefit. Target architectures evolve over time, so embrace the never-ending process of improvement toward an architectural vision.

How a Super iPaaS Brings Application and Data Integration Together

The functions of application integration and data integration tools are separate but have synergies when used together. For example, on the operational side, historical data can be ingested and transformed by data integration tools and activated through application integration. On the analytical side, the scope of data available for business intelligence can expand to include real-time access to application data. Another term to add to your lexicon is “Super iPaaS,” a new category of platform that offers both application integration and data integration. With independent components that operate within a unified platform, both operational and analytical business users will benefit.

*Microsoft, Excel, and PowerPoint are trademarks of the Microsoft group of companies.